Much like the Accounts Receivable ages debtor accounts function in any business, we can age application user accounts that have never connected to the application or have not connected in some time via the full and self-service screens.

Why would we want to do this? Having people who have long left the business retaining active accounts is a security issue, and all the best managed sites have processes in place to check that such accounts are being closed on a timely basis.

It is possible to generate a list of application user accounts that have either never connected or have not connected in the last 120 days.

The first step is to identify the number of accounts you have that are candidates for review:

SELECT count(*)

FROM applsys.fnd_user fu

WHERE (fu.last_logon_date is null

or (sysdate - fu.last_logon_date) >= 120 )

and (fu.end_date is null

or fu.end_date > sysdate )

and fu.user_name not in ('SYSADMIN','GUEST');

The second step is to produce a list of application accounts that are candidates for review:

SELECT fu.user_id,

fu.user_name,

substr(fu.description, 1, 40)

decode(sign(length(fu.description) - 40), 1, '...') description,

nvl(to_char(fu.last_logon_date, 'DD-Mon-YY HH24:MI'), 'Never Connected')

last_connect_date,

decode(fu.last_logon_date, null, null,

round( (sysdate - fu.last_logon_date),0) ) days_since_last_connect

FROM applsys.fnd_user fu

WHERE (fu.last_logon_date is null

or (sysdate - fu.last_logon_date) >= 120 )

and (fu.end_date is null

or fu.end_date > sysdate )

and fu.user_name not in ('SYSADMIN','GUEST')

ORDER by decode(fu.last_logon_date, null, (sysdate - 10000), fu.last_logon_date),

fu.user_name;

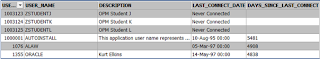

The output will look something like the following example:

Remember: Not all Aged User Accounts are candidates for end dating:

There are of course exceptions, those accounts that must exist but are never used and those that are used very infrequently. You can exclude these by simply adding these accounts to your SQL statement:

and fu.user_name not in (‘SYSADMIN’,’GUEST’)

You should run the second report say once per month as part of your normal application maintenance routine and send it to your HR department or whoever manages your application user accounts. It shows the business you are on top of managing the application and the business loves anything to do with security...

About this blog

As with my website, my blogs espouse the philosophy “not all performance problems are technical” and that in most stable environments user behaviour / usage patterns are typically the source of most correctable performance problems. So if you are into bits, bytes, locks, latches etc this is not the place for you, but if you are after ways to genuinely enhance performance and work with your systems and customers to achieve better, more predictable performance, read on.

Friday, December 17, 2010

Friday, November 19, 2010

Is the number of concurrent manager processes you have causing performance issues?

All too often I see sites with far too many concurrent manager processes and the site wonders why they have intermittent performance issues. Remember, having more concurrent manager processes does not mean more throughput.

So, how many concurrent manager processes should you have?

Add up the number of standard and custom concurrent manager processes you have. If that value exceeds 2 times the number of CPUs (multi core adjusted) on the box you most likely have too many manager processes. If you are experiencing intermittent performance issues, particularly around high processing times like financial month ends, too many manager processes would most likely be one of your reasons.

The following SQL will list your concurrent managers:

SELECT concurrent_queue_name,

max_processes,

running_processes,

nvl(sleep_seconds,0) sleep_seconds,

cache_size,

decode(enabled_flag,

'Y', 'Enabled', 'N', 'Disabled', 'Unknown' ) status

FROM applsys.fnd_concurrent_queues

ORDER by decode(enabled_flag, 'Y', 1, 'N', 0, enabled_flag ) DESC,

max_processes DESC,

decode(concurrent_queue_name,

'FNDICM', 'AA',

'FNDCRM', 'AB',

'STANDARD', 'AC',

concurrent_queue_name);

A real world example of too many managers:

A site I reviewed had 54 standard manager processes on a 4 CPU box - 54 / 4 = 13.5

In this example there can be up to 54 concurrent requests running through the standard managers at any time which will just plain flood the CPUs. Generally running two (2) concurrent requests per CPU is sufficient to leave enough overhead for normal processing activities; any more than that and the risk increases of CPU flooding. And you would hope this site does run too many FSG’s... as we all know the damage they can do...

Believe it or not I have even seen 108 standard managers on an 8 CPU box..... Hmmm.....

Flooding the CPUs with concurrent requests leaves very little available CPU for normal user requests. As a result users tend to experience poor performance with their forms etc... It’s basic queuing theory... To make matters worse, these intermittent performance issues tend to occur around peak processing times when the concurrent manager load is at its peak; exactly the time users are clearly very busy and don’t want to be experiencing performance issues.

If you have a “problem” with an excessive number of standard and custom concurrent managers processes, what you need to do is lower the number of processes. This is easier said than done, as once they exist the business is very reluctant to let them go. But it is worth persisting as it will make a difference!

For more information refer to the paper I wrote:

http://www.piper-rx.com/pages/papers/cm101.pdf

Believe it or not, and much to my surprise, this is the most down loaded paper on my web site. Even though I wrote this paper in 2004, it still holds true today.... not much changes in OEBS, and for good reason; stability in accounting and business systems is what businesses want.

So, how many concurrent manager processes should you have?

Add up the number of standard and custom concurrent manager processes you have. If that value exceeds 2 times the number of CPUs (multi core adjusted) on the box you most likely have too many manager processes. If you are experiencing intermittent performance issues, particularly around high processing times like financial month ends, too many manager processes would most likely be one of your reasons.

The following SQL will list your concurrent managers:

SELECT concurrent_queue_name,

max_processes,

running_processes,

nvl(sleep_seconds,0) sleep_seconds,

cache_size,

decode(enabled_flag,

'Y', 'Enabled', 'N', 'Disabled', 'Unknown' ) status

FROM applsys.fnd_concurrent_queues

ORDER by decode(enabled_flag, 'Y', 1, 'N', 0, enabled_flag ) DESC,

max_processes DESC,

decode(concurrent_queue_name,

'FNDICM', 'AA',

'FNDCRM', 'AB',

'STANDARD', 'AC',

concurrent_queue_name);

Example Output

A real world example of too many managers:

A site I reviewed had 54 standard manager processes on a 4 CPU box - 54 / 4 = 13.5

In this example there can be up to 54 concurrent requests running through the standard managers at any time which will just plain flood the CPUs. Generally running two (2) concurrent requests per CPU is sufficient to leave enough overhead for normal processing activities; any more than that and the risk increases of CPU flooding. And you would hope this site does run too many FSG’s... as we all know the damage they can do...

Believe it or not I have even seen 108 standard managers on an 8 CPU box..... Hmmm.....

Flooding the CPUs with concurrent requests leaves very little available CPU for normal user requests. As a result users tend to experience poor performance with their forms etc... It’s basic queuing theory... To make matters worse, these intermittent performance issues tend to occur around peak processing times when the concurrent manager load is at its peak; exactly the time users are clearly very busy and don’t want to be experiencing performance issues.

If you have a “problem” with an excessive number of standard and custom concurrent managers processes, what you need to do is lower the number of processes. This is easier said than done, as once they exist the business is very reluctant to let them go. But it is worth persisting as it will make a difference!

For more information refer to the paper I wrote:

http://www.piper-rx.com/pages/papers/cm101.pdf

Believe it or not, and much to my surprise, this is the most down loaded paper on my web site. Even though I wrote this paper in 2004, it still holds true today.... not much changes in OEBS, and for good reason; stability in accounting and business systems is what businesses want.

Saturday, November 6, 2010

Case Review - How did I manage to get 30 million rows in my fnd_logins table?

The site in presented with over 30 million rows in the fnd_logins table, growing at a rate of approximately 25,000 records per day.

Based on the site’s application activity, the estimated number of rows that should be held in the fnd_logins table should be around 800,000 records (i.e. holding 32 days history on-line). It is expected that this number should reduce post concurrent manager activity review.

The site was running the concurrent program Purge Signon Audit data (FNDSCPRG) daily as part of their normal maintenance program to purge the sign-on audit data and was unaware of the high growth rate in the sign-on audit tables.

On review of the site’s scheduled requests it was found that the Purge Signon Audit data was being run daily with the date argument set to 10-Oct-06, however, “the increment date parameter each run” check box had not been checked when the scheduled request was created. As such the data parameter has not been incrementing with each run, thus with each run the program has been purging all sign-on audit records prior to 10-Oct-06. As a result any record added after that date has not been being purged.

So, since 10-Oct-06 (Over 1,200 days at the time of presentation) the program has been running but purging nothing.

Whilst there are several inherent performance issues, the biggest impact to the application would be when the concurrent program runs. The concurrent program Purge Signon Audit data (FNDSCPRG) uses an un-indexed column start_time in its execution. As such the purge program will execute a full scan on each of the target tables in order to determine the rows to delete. So for this site, that would include a daily full scan of a 30 million to not find any rows to delete.

The case review shows how I would “Fix” the issue including deleting the unwanted rows and setting up a new Purge Signon Audit data scheduled program run.

The full case review can be found at -

http://www.piper-rx.com/pages/case_reviews/case_sign_on_audit.pdf

Based on the site’s application activity, the estimated number of rows that should be held in the fnd_logins table should be around 800,000 records (i.e. holding 32 days history on-line). It is expected that this number should reduce post concurrent manager activity review.

The site was running the concurrent program Purge Signon Audit data (FNDSCPRG) daily as part of their normal maintenance program to purge the sign-on audit data and was unaware of the high growth rate in the sign-on audit tables.

On review of the site’s scheduled requests it was found that the Purge Signon Audit data was being run daily with the date argument set to 10-Oct-06, however, “the increment date parameter each run” check box had not been checked when the scheduled request was created. As such the data parameter has not been incrementing with each run, thus with each run the program has been purging all sign-on audit records prior to 10-Oct-06. As a result any record added after that date has not been being purged.

So, since 10-Oct-06 (Over 1,200 days at the time of presentation) the program has been running but purging nothing.

Whilst there are several inherent performance issues, the biggest impact to the application would be when the concurrent program runs. The concurrent program Purge Signon Audit data (FNDSCPRG) uses an un-indexed column start_time in its execution. As such the purge program will execute a full scan on each of the target tables in order to determine the rows to delete. So for this site, that would include a daily full scan of a 30 million to not find any rows to delete.

The case review shows how I would “Fix” the issue including deleting the unwanted rows and setting up a new Purge Signon Audit data scheduled program run.

The full case review can be found at -

http://www.piper-rx.com/pages/case_reviews/case_sign_on_audit.pdf

Sunday, October 10, 2010

Better Date and Elapsed Time Reporting Formats for Business Users

I am a business user, so why say to me “it ran for 0.049 days”?

All too often I see reports created by technical staff and DBAs being presented to management and business users with elapsed times (such as concurrent request run times) calculated in days or seconds and this often causes frustration amongst report recipients. Here are some typical examples:-

• A concurrent request run time of 1 hour and 10 minutes is commonly reported as:

What you should remember when dealing with management or users is they usually hate having to perform mental gymnastics to calculate a time, what is 0.02 days or 1,454 seconds?

Would it not be better if the time was quoted as 28 minutes? I believe it’s always preferable when presenting information to management and business users to convert elapsed time to Days, Hours and Minutes.

The following is an example report showing both YY:MM:DD and DD:HH:MI formats:

Remember; always make life easier for your target user. They will appreciate it and in turn will be more likely to be supportive when you need it!

All too often I see reports created by technical staff and DBAs being presented to management and business users with elapsed times (such as concurrent request run times) calculated in days or seconds and this often causes frustration amongst report recipients. Here are some typical examples:-

• A concurrent request run time of 1 hour and 10 minutes is commonly reported as:

- 0.0486 days or

- 4,200 seconds

What you should remember when dealing with management or users is they usually hate having to perform mental gymnastics to calculate a time, what is 0.02 days or 1,454 seconds?

Would it not be better if the time was quoted as 28 minutes? I believe it’s always preferable when presenting information to management and business users to convert elapsed time to Days, Hours and Minutes.

The following is an example report showing both YY:MM:DD and DD:HH:MI formats:

The full article including example SQL can be found at:

http://www.piper-rx.com/pages/tips/date_time_formatting.pdf Remember; always make life easier for your target user. They will appreciate it and in turn will be more likely to be supportive when you need it!

Tuesday, September 21, 2010

A workflow Case Review on how to lower the number of Workflow Background Processes

Having encountered a number of sites running upward of over 5,000 workflow background processes per day, in some cases over 15,000 per day, it never ceases to amaze me how so many sites get themselves into so much trouble running far too many background processes. Not only does this thrash your application but it also has an impact on your concurrent request tables as each request adds a record in the fnd_concurrent_requests table for each run.

Just recently I have been corresponding with a site that contacted me regarding issues with their workflow application and this has prompted me to put together a case review to articulate how I would look to resolve the issue of too many workflow background processes for the particular scenario outlined.

The full case review can be found on my web site:

http://www.piper-rx.com/pages/case_reviews/case_wf_bgp.pdf

It’s pointless looking for poor performing code with this issue…

-Gary

Just recently I have been corresponding with a site that contacted me regarding issues with their workflow application and this has prompted me to put together a case review to articulate how I would look to resolve the issue of too many workflow background processes for the particular scenario outlined.

The full case review can be found on my web site:

http://www.piper-rx.com/pages/case_reviews/case_wf_bgp.pdf

It’s pointless looking for poor performing code with this issue…

This is a good example of my statement “Not all performance issues are solved by code tuning”.

-Gary

Monday, September 13, 2010

Shortening large columns in user facing reports

Where a database column and its content is larger than the size you wish to show in a report, it is easy to just substring the column value to fit your report column size. However, this often does not look that good in user facing reports.

To demonstrate this concept below I have used the application users description column in applsys.fnd_user which is a varchar2(240)...

SELECT fu.user_id user_id,

fu.user_name user_name,

fu.description description

FROM applsys.fnd_user fu;

In most cases when you write a report you substring the column to the size of the column in your report. Whilst sub stringing the column value is the easy solution and in your mind acceptable, you should remember your reports are aimed at end users wouldn’t it look more professional if you added three (3) full stops to a sub stringed value to clearly indicate the column has been truncated.

SELECT fu.user_id user_id,

fu.user_name user_name,

substr(fu.description, 1, 30)

decode(sign(length(fu.description) - 30), 1, '...') description

FROM applsys.fnd_user fu;

If your report column size is 30, set the two (2) values in the example SQL to 27, that is 27 characters plus 3 for the full stops.

A very simple piece of code but what a difference. You should consider using this for any user report where a data column that has been truncated.

Remember:

“Always make life easier for your target user”

- Gary

To demonstrate this concept below I have used the application users description column in applsys.fnd_user which is a varchar2(240)...

SELECT fu.user_id user_id,

fu.user_name user_name,

fu.description description

FROM applsys.fnd_user fu;

In most cases when you write a report you substring the column to the size of the column in your report. Whilst sub stringing the column value is the easy solution and in your mind acceptable, you should remember your reports are aimed at end users wouldn’t it look more professional if you added three (3) full stops to a sub stringed value to clearly indicate the column has been truncated.

SELECT fu.user_id user_id,

fu.user_name user_name,

substr(fu.description, 1, 30)

decode(sign(length(fu.description) - 30), 1, '...') description

FROM applsys.fnd_user fu;

If your report column size is 30, set the two (2) values in the example SQL to 27, that is 27 characters plus 3 for the full stops.

A very simple piece of code but what a difference. You should consider using this for any user report where a data column that has been truncated.

Remember:

“Always make life easier for your target user”

- Gary

Saturday, August 28, 2010

General Ledger - GL Program Optimizer

The General Ledger - GL program optimizer is a concurrent program that is available to the GL super user responsibility and it has two functions:

The program collects and stores information on the spread of balances in gl.gl_balances which is used by both the GL posting program and FSGs. Given that the number of balances in gl.gl_balances increases each month with the month end roll process, it is important for the overall performance of posting and FSGs to keep the GL program optimizer stats up to date.

When was the GL Program Optimizer last run?

The following SQL shows you when the GL program optimizer was last run and by whom:

SELECT fu.user_name || ' - ' ||fu.description "Last Run By",

to_char(max(rts.last_update_date),'DD-Mon-YY HH24:MI') "Last Run",

trunc(sysdate - max(rts.last_update_date)) "Age (Days)"

FROM rg.rg_table_sizes rts,

applsys.fnd_user fu

WHERE rts.last_updated_by = fu.user_id

GROUP by fu.user_name, fu.description;

It is not uncommon for the program to have never been run.

Warning: Do not set the Rebuild Indexes option to Yes as this could cause more damage and may have an adverse effect on the entire General Ledger application. FYI Older releases dropped existing code combination indexes and rebuild indexes as defined in the flexfield definitions. Current releases will maintain existing indexes and build only those that have been defined in the flexfiled definitions that do not currently exist.

Given the above warning, what we don’t want is any of the GL super users finding the GL program optimizer program and running it thinking they are doing the right thing; I have been there and it’s not pretty. That’s why I always remove it from the GL super user responsibility and add it to the Systems Administrator responsibility which they cannot get to.

- Collect statistics to aid in the running of FSGs and posting

- Build indexes on gl_code_conbinations where they do not exist.

The program collects and stores information on the spread of balances in gl.gl_balances which is used by both the GL posting program and FSGs. Given that the number of balances in gl.gl_balances increases each month with the month end roll process, it is important for the overall performance of posting and FSGs to keep the GL program optimizer stats up to date.

When was the GL Program Optimizer last run?

The following SQL shows you when the GL program optimizer was last run and by whom:

SELECT fu.user_name || ' - ' ||fu.description "Last Run By",

to_char(max(rts.last_update_date),'DD-Mon-YY HH24:MI') "Last Run",

trunc(sysdate - max(rts.last_update_date)) "Age (Days)"

FROM rg.rg_table_sizes rts,

applsys.fnd_user fu

WHERE rts.last_updated_by = fu.user_id

GROUP by fu.user_name, fu.description;

It is not uncommon for the program to have never been run.

This program is run by logging onto the GL super user responsibility and then running the concurrent program - GL Program Optimizer.

When running the GL Program Optimizer there are two options:

- Collect Statistics (Default Yes) -Set this value to Yes

- Rebuild Indexes (Default No) - Set this value to No

Warning: Do not set the Rebuild Indexes option to Yes as this could cause more damage and may have an adverse effect on the entire General Ledger application. FYI Older releases dropped existing code combination indexes and rebuild indexes as defined in the flexfield definitions. Current releases will maintain existing indexes and build only those that have been defined in the flexfiled definitions that do not currently exist.

Given the above warning, what we don’t want is any of the GL super users finding the GL program optimizer program and running it thinking they are doing the right thing; I have been there and it’s not pretty. That’s why I always remove it from the GL super user responsibility and add it to the Systems Administrator responsibility which they cannot get to.

How often should GL Program Optimizer be run?

I generally set this up as a scheduled job to be run once per month, a couple of days after the month end roll over.

Good Luck....

Friday, August 13, 2010

What OEBS Application modules are installed?

The following genreates a list of the OEBS Application modules you have installed including their version and patch levels...

SELECT fa.application_id appn_id,

decode(fa.application_short_name,

'SQLAP', 'AP', 'SQLGL', 'GL', fa.application_short_name ) appn_short_name,

substr(fat.application_name,1,50)

decode(sign(length(fat.application_name) - 50), 1, '...') Application,

fa.basepath Base_path,

fl.meaning install_status,

nvl(fpi.product_version, 'Not Available') product_version,

nvl(fpi.patch_level, 'Not Available') patch_level,

to_char(fa.last_update_date, 'DD-Mon-YY (Dy) HH24:MI') last_update_date,

nvl(fu.user_name, '* Install *') Updated_by

FROM applsys.fnd_application fa,

applsys.fnd_application_tl fat,

applsys.fnd_user fu,

applsys.fnd_product_installations fpi,

apps.fnd_lookups fl

WHERE fa.application_id = fat.application_id

and fat.language(+) = userenv('LANG')

and fa.application_id = fpi.application_id

and fpi.last_updated_by = fu.user_id(+)

and fpi.status = fl.lookup_code

and fl.lookup_type = 'FND_PRODUCT_STATUS'

UNION ALL

SELECT fa.application_id,

fa.application_short_name,

substr(fat.application_name,1,50)

decode(sign(length(fat.application_name) - 50), 1, '...'),

fa.basepath,

'Not Available',

'Not Available',

'Not Available',

to_char(fa.last_update_date, 'DD-Mon-YY (Dy) HH24:MI'),

nvl(fu.user_name, '* Install *')

FROM applsys.fnd_application fa,

applsys.fnd_application_tl fat,

applsys.fnd_user fu

WHERE fa.application_id = fat.application_id

and fat.language(+) = userenv('LANG')

and fa.last_updated_by = fu.user_id(+)

and fa.application_id not in

( SELECT fpi.application_id

FROM applsys.fnd_product_installations fpi )

ORDER by 2;

Example output:

Note: For readability I have converted the SQLAP to AP and SQLGL to GL – this looks so much better for users...

Hope this is useful....

SELECT fa.application_id appn_id,

decode(fa.application_short_name,

'SQLAP', 'AP', 'SQLGL', 'GL', fa.application_short_name ) appn_short_name,

substr(fat.application_name,1,50)

decode(sign(length(fat.application_name) - 50), 1, '...') Application,

fa.basepath Base_path,

fl.meaning install_status,

nvl(fpi.product_version, 'Not Available') product_version,

nvl(fpi.patch_level, 'Not Available') patch_level,

to_char(fa.last_update_date, 'DD-Mon-YY (Dy) HH24:MI') last_update_date,

nvl(fu.user_name, '* Install *') Updated_by

FROM applsys.fnd_application fa,

applsys.fnd_application_tl fat,

applsys.fnd_user fu,

applsys.fnd_product_installations fpi,

apps.fnd_lookups fl

WHERE fa.application_id = fat.application_id

and fat.language(+) = userenv('LANG')

and fa.application_id = fpi.application_id

and fpi.last_updated_by = fu.user_id(+)

and fpi.status = fl.lookup_code

and fl.lookup_type = 'FND_PRODUCT_STATUS'

UNION ALL

SELECT fa.application_id,

fa.application_short_name,

substr(fat.application_name,1,50)

decode(sign(length(fat.application_name) - 50), 1, '...'),

fa.basepath,

'Not Available',

'Not Available',

'Not Available',

to_char(fa.last_update_date, 'DD-Mon-YY (Dy) HH24:MI'),

nvl(fu.user_name, '* Install *')

FROM applsys.fnd_application fa,

applsys.fnd_application_tl fat,

applsys.fnd_user fu

WHERE fa.application_id = fat.application_id

and fat.language(+) = userenv('LANG')

and fa.last_updated_by = fu.user_id(+)

and fa.application_id not in

( SELECT fpi.application_id

FROM applsys.fnd_product_installations fpi )

ORDER by 2;

Example output:

Note: For readability I have converted the SQLAP to AP and SQLGL to GL – this looks so much better for users...

Hope this is useful....

Subscribe to:

Posts (Atom)